EXTRA MATERIAL

Material in this section is optional and will not be part of the course assessment.

☕️ Dynamic optimization#

⏱ | words

Dynamic decision problems

Dynamic programming for finite horizon problems

Dynamic programming for infinite horizon problems

Dynamic decision problems#

As before, let’s start with our definition of a general optimization problem

Definition

The general form of the optimization problem is

where:

\(f(x,\theta) \colon \mathbb{R}^N \times \mathbb{R}^K \to \mathbb{R}\) is an objective function

\(x \in \mathbb{R}^N\) are decision/choice variables

\(\theta \in \mathbb{R}^K\) are parameters

\(\mathcal{D}(\theta)\) denotes the admissible set

\(g_i(x,\theta) = 0, \; i\in\{1,\dots,I\}\) where \(g_i \colon \mathbb{R}^N \times \mathbb{R}^K \to \mathbb{R}\), are equality constraints

\(h_j(x,\theta) \le 0, \; j\in\{1,\dots,J\}\) where \(h_j \colon \mathbb{R}^N \times \mathbb{R}^K \to \mathbb{R}\), are inequality constraints

\(V(\theta) \colon \mathbb{R}^K \to \mathbb{R}\) is a value function

Crazy thought

Can we let the objective function include the value function as a component?

Think of an optimization problem that repeats over and over again, for example in time

Value function (as the maximum attainable value) of the next optimization problem may be part of the current optimization problems

The parameters \(\theta\) may be adjusted to link these optimization problems together

Example

Imagine the optimization problem with \(g(x,\theta)= \sum_{t=0}^{\infty}\beta^{t}u(x,\theta)\) where \(b \in (0,1)\) to ensure the infinite sum converges. We can write

Note that the trick in the example above works because \(g(x,\theta)\) is an infinite sum

Among other things this implies that \(x\) is infinite dimension!

Let the parameter \(\theta\) to keep track time, so let \(\theta = (t, s_t)\)

Then it is possible to use the same principle for the optimization problems without infinite sums

Introduce new notation for the value function

Definition: dynamic optimization problems

The general form of the deterministic dynamic optimization problem is

where:

\(f(x,s_t) \colon \mathbb{R}^N \times \mathbb{R}^K \to \mathbb{R}\) is an instantaneous reward function

\(x \in \mathbb{R}^N\) are decision/choice variables

\(s_t \in \mathbb{R}^K\) are state variables

state transitions are given by \(s_{t+1} = g(x,s_t)\) where \(g \colon \mathbb{R}^N \times \mathbb{R}^K \to \mathbb{R}^K\)

\(\mathcal{D}_t(s_t) \subset \mathbb{R}^N\) denotes the admissible set at decision instance (time period) \(t\)

\(\beta \in (0,1)\) is a discount factor

\(V_t(s_t) \colon \mathbb{R}^K \to \mathbb{R}\) is a value function

Denote the set of maximizers of each instantaneous optimization problem as

\(x^\star_t(s_t)\) is called a policy correspondence or policy function

State space \(s_t\) is of primary importance for the economic interpretation of the problem: it encompasses all the information that the decision maker conditions their decisions on

Has to be carefully founded in economic theory and common sense

Similarly the transition function \(g(x,s_t)\) reflects the exact beliefs of the decision maker about how the future state is determined and has direct implications for the optimal decisions

Note

In the formulation above, as seen from transition function \(g(x,s_t)\), we implicitly assume that the history of states from some initial time period \(t_0\) is not taken into account. Decision problems where only the current state is relevant for the future are called Markovian decision problems

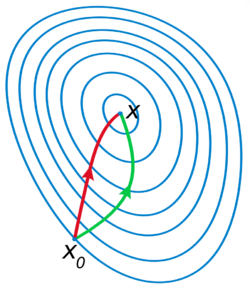

Dynamic programming and Bellman principle of optimality#

An optimal policy has a property that whatever the initial state and initial decision are, the remaining decisions must constitute an optimal policy with regard to the state resulting from the first decision.

📖 Bellman, 1957 “Dynamic Programming”

Main idea

Breaking the problem into sequence of small problems

Dynamic programming is recursive method for solving sequential decision problems

📖 Rust 2006, New Palgrave Dictionary of Economics

In computer science the meaning of the term is broader: DP is a general algorithm design technique for solving problems with overlapping sub-problems

Thus, the sequential decision problem is broken into current decision and the future decisions problems, and solved recursively

The solution can be computed through backward induction which is the process of solving a sequential decision problem from the later periods to the earlier ones, this is the main algorithm used in dynamic programming (DP)

Embodiment of the recursive way of modeling sequential decisions is Bellman equation

Definition

Bellman equation is a functional equation in an unknown function \(V(\cdot)\) which embodies a dynamic optimization problem and has the form

expectation \(\mathbb{E}\{\cdot\}\) is taken over the distribution of the next period state conditional on current state and decision when the state transitions are given by a stochastic process

the optimal choices (policy correspondence) are revealed along the solution of the Bellman equation as decisions which solve the maximization problem in the right hand side, i.e.

DP is a the main tool in analyzing modern micro and macto economic models

DP provides a framework to study decision making over time and under uncertainty and can accommodate:

learning and human capital formation

wealth accumulation

health dynamics

strategic interactions between agents (game theory)

market interactions (equilibrium theory)

etc

Many important problems and economic models are analyzed and solved using dynamic programming:

Dynamic models of labor supply

Job search

Human capital accumulation

Health process, insurance and long term care

Consumption/savings choices

Durable consumption

Growth models

Heterogeneous agents models

Overlapping generation models

Origin of the term Dynamic Programming

The 1950’s were not good years for mathematical research. We had a very interesting gentleman in Washington named Wilson. He was Secretary of Defence, and he actually had a pathological fear and hatred of the word “research”.

I’m not using the term lightly; I’m using it precisely. His face would suffuse, he would turn red, and he would get violent if people used the term, research, in his presence. You can imagine how he felt, then, about the term, mathematical.

Hence, I felt I had to do something to shield Wilson and the Air Force from the fact that I was really doing mathematics inside the RAND Corporation.

What title, what name, could I choose?

In the first place, I was interested in planning, in decision-making, in thinking. But planning, is not a good word for various reasons. I decided therefore to use the word, “programming”.

I wanted to get across the idea that this was dynamic, this was multistage, this was time-varying.

I thought, let’s kill two birds with one stone. Let’s take a word which has an absolutely precise meaning, namely dynamic, in the classical physical sense.

It also has a very interesting property as an adjective, and that is it’s impossible to use the word, dynamic, in the pejorative sense.

Thus, I thought dynamic programming was a good name. It was something not even a Congressman could object to. So I used it as an umbrella for my activities.

📖 Bellman’s autobiography “The Eye of the Hurricane”

Finite and infinite horizon problems#

Although dynamic programming primarily deals with infinite horizon problems, it can also be applied to the finite horizon problems where \(T < \infty\)

Terms backwards induction and dynamic programming are associated by many authors respectively with finite and infinite horizon problems

Our definition of a dynamic optimization problem contains elements of both finite and infinite horizon problem setup, and needs more detail

Finite horizon problems

Time is discrete and finite, \(t \in \{0,1,\dots,T\}\) where \(T < \infty\)

Parameter vector \(\theta\) indeed contains \(t\) such that the value and policy functions along with other elements of the model do depend on \(t\), i.e. may differ between time periods

“Bellman equation” is a (common!) misuse of the term, because with time subscripts for the value function, it is not a functional equation any more

However, the term “Bellman equation” is still used for the finite horizon problems to denote the following expression:

Period \(t=T\) is referred to as the terminal period, and the value function at this period is called the terminal value function

Because there is no next period in the terminal period, \(V_{T+1}(s_{T+1}) = 0\) for all \(s_T\), giving the expression for the Bellman equation above

Infinite horizon problems

Time is discrete and infinite, \(t \in \{0,1,\dots,\infty\}\), sometimes denoted as \(T = \infty\)

Parameter vector \(\theta\) does not contain \(t\) and so the value and policy functions can not depend on \(t\), and so other elements of the model

In other words, the solution of the model in this case has to be time invariant

Bellman equation is a proper functional equation, which together with the policy function, describes the optimal decision rule in the long term. In some sense, the solution to the infinite dimensional problem is one pair \((V(s), x^\star(s))\), whereas in the general setup both elements of the solution are tuples

Infinite horizon and stochastic dynamic optimization problems#

\(T = \infty\)

subscripts \(t\) can be dropped

solution of the model \(V(s), x^\star(s)\) is time invariant

Bellman equation is a proper functional equation where the value function \(V(s)\) is an unknown

In infinite horizon the dynamic optimization problem

where \(s'\) is the next period state, becomes a fixed point problem of a Bellman operator, i.e. the problem of solving a functional equation.

Definition

Let \(B(S)\) denote a set of all bounded real-valued functions on a set \(S \subset \mathbb{R}^K\).

Bellman operator is a mapping from \(B(S)\) to itself defined as

where:

\(f(x,s) \colon \mathbb{R}^N \times S \to \mathbb{R}\) is an instantaneous reward function

\(x \in \mathbb{R}^N\) are decision/choice variables

\(s \in S \subset \mathbb{R}^K\) are state variables

state transitions are given by \(s' = g(x,s)\) where \(g \colon \mathbb{R}^N \times S \to S\)

\(\mathcal{D}(s) \subset \mathbb{R}^N\) denotes the admissible set

\(\beta \in (0,1)\) is a discount factor

\(V(s) \colon S \to \mathbb{R}\) is a value function

Solution of the Bellman equation is given by a fixed point of the Bellman operator, i.e. such function \(V(\cdot)\) which is mapped to itself by the Bellman operator, and the corresponding policy function

Theory of dynamic programming#

Definition: contraction mapping

Let \((S,\rho)\) be a complete metric space, i.e. a metric space where every Cauchy sequence converges to a point in \(S\).

Let \(T: S \rightarrow S\) denote an operator mapping \(S\) to itself. \(T\) is called a contraction on \(S\) with modulus \(\lambda\) if \(0 \le \lambda < 1\) and

Contraction mapping brings points in its domain “closer” to each other!

Example

What is the value of annuity \(V\) paying regular payments \(c\) forever?

Let \(r\) be the interest rate, then the value of annuity is given by

Equivalently with \(\beta = \frac{1}{1+r}\)

Can reformulate recursively (as “Bellman equation” without choice)

with the corresponding “Bellman operator”

Is \(T(V)\) a contraction?

Yes as long as long as \(\beta < 1\)!

contraction mapping under Euclidean norm

modulus of the contraction is \(\beta\)

Contractions are invaluable because of uniqueness of their fixed point and a surefire algorithm to compute them

Banach contraction mapping theorem (fixed point theorem)

Let \((S,\rho)\) be a complete metric space with a contraction mapping \(T: S \rightarrow S\). Then

\(T\) admits a unique fixed-point \(V^{\star} \in S \colon T(V^{\star}) = V^{\star}\)

\(V^{\star}\) can be found by repeated application of the operator \(T\), i.e. \(T^n(V) \rightarrow V^{\star}\) as \(n\rightarrow \infty\)

In other words, the fixed point can be found by successive approximations from any starting point \(\rightarrow\) commonly known in economics as value function iterations (VFI) algorithm

Value function iterations (VFI) algorithm

Start with a guess for value function \(V_0\), \(i=0\)

Apply Bellman operator to \(V_i\) and increment \(i\)

Repeat until convergence, i.e. \(\| V_{i+1}-V_i \|<\epsilon\) for some small $\e

Show code cell content

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

class annuity():

def __init__(self,c=1,beta=.9):

self.c = c # Annual payment

self.beta = beta # Discount factor

self.analytic = c/(1-beta) # compute analytic solution right away

def bellman(self,V):

'''Bellman equation'''

return self.c + self.beta*V

def solve(self, maxiter = 1000, tol=1e-4, verbose=False):

'''Solves the model using successive approximations'''

if verbose: print('{:<4} {:>15} {:>15}'.format('Iter','Value','Error'))

V0=0

for i in range(maxiter):

V1=self.bellman(V0)

if verbose: print('{:<4d} {:>15.8f} {:>15.8f}'.format(i,V1,V1-self.analytic))

if abs(V1-V0) < tol:

break

V0=V1

else: # when i went up to maxiter

print('No convergence: maximum number of iterations achieved!')

return V1

a = annuity(c=10,beta=0.92)

a.solve(verbose=True)

print('Numeric solution is ',a.solve())

Iter Value Error

0 10.00000000 -115.00000000

1 19.20000000 -105.80000000

2 27.66400000 -97.33600000

3 35.45088000 -89.54912000

4 42.61480960 -82.38519040

5 49.20562483 -75.79437517

6 55.26917485 -69.73082515

7 60.84764086 -64.15235914

8 65.97982959 -59.02017041

9 70.70144322 -54.29855678

10 75.04532776 -49.95467224

11 79.04170154 -45.95829846

12 82.71836542 -42.28163458

13 86.10089619 -38.89910381

14 89.21282449 -35.78717551

15 92.07579853 -32.92420147

16 94.70973465 -30.29026535

17 97.13295588 -27.86704412

18 99.36231941 -25.63768059

19 101.41333385 -23.58666615

20 103.30026715 -21.69973285

21 105.03624577 -19.96375423

22 106.63334611 -18.36665389

23 108.10267842 -16.89732158

24 109.45446415 -15.54553585

25 110.69810702 -14.30189298

26 111.84225846 -13.15774154

27 112.89487778 -12.10512222

28 113.86328756 -11.13671244

29 114.75422455 -10.24577545

30 115.57388659 -9.42611341

31 116.32797566 -8.67202434

32 117.02173761 -7.97826239

33 117.65999860 -7.34000140

34 118.24719871 -6.75280129

35 118.78742281 -6.21257719

36 119.28442899 -5.71557101

37 119.74167467 -5.25832533

38 120.16234070 -4.83765930

39 120.54935344 -4.45064656

40 120.90540517 -4.09459483

41 121.23297275 -3.76702725

42 121.53433493 -3.46566507

43 121.81158814 -3.18841186

44 122.06666109 -2.93333891

45 122.30132820 -2.69867180

46 122.51722194 -2.48277806

47 122.71584419 -2.28415581

48 122.89857665 -2.10142335

49 123.06669052 -1.93330948

50 123.22135528 -1.77864472

51 123.36364686 -1.63635314

52 123.49455511 -1.50544489

53 123.61499070 -1.38500930

54 123.72579144 -1.27420856

55 123.82772813 -1.17227187

56 123.92150988 -1.07849012

57 124.00778909 -0.99221091

58 124.08716596 -0.91283404

59 124.16019268 -0.83980732

60 124.22737727 -0.77262273

61 124.28918709 -0.71081291

62 124.34605212 -0.65394788

63 124.39836795 -0.60163205

64 124.44649851 -0.55350149

65 124.49077863 -0.50922137

66 124.53151634 -0.46848366

67 124.56899504 -0.43100496

68 124.60347543 -0.39652457

69 124.63519740 -0.36480260

70 124.66438161 -0.33561839

71 124.69123108 -0.30876892

72 124.71593259 -0.28406741

73 124.73865798 -0.26134202

74 124.75956535 -0.24043465

75 124.77880012 -0.22119988

76 124.79649611 -0.20350389

77 124.81277642 -0.18722358

78 124.82775431 -0.17224569

79 124.84153396 -0.15846604

80 124.85421124 -0.14578876

81 124.86587435 -0.13412565

82 124.87660440 -0.12339560

83 124.88647605 -0.11352395

84 124.89555796 -0.10444204

85 124.90391333 -0.09608667

86 124.91160026 -0.08839974

87 124.91867224 -0.08132776

88 124.92517846 -0.07482154

89 124.93116418 -0.06883582

90 124.93667105 -0.06332895

91 124.94173736 -0.05826264

92 124.94639837 -0.05360163

93 124.95068650 -0.04931350

94 124.95463158 -0.04536842

95 124.95826106 -0.04173894

96 124.96160017 -0.03839983

97 124.96467216 -0.03532784

98 124.96749839 -0.03250161

99 124.97009852 -0.02990148

100 124.97249063 -0.02750937

101 124.97469138 -0.02530862

102 124.97671607 -0.02328393

103 124.97857879 -0.02142121

104 124.98029248 -0.01970752

105 124.98186909 -0.01813091

106 124.98331956 -0.01668044

107 124.98465399 -0.01534601

108 124.98588167 -0.01411833

109 124.98701114 -0.01298886

110 124.98805025 -0.01194975

111 124.98900623 -0.01099377

112 124.98988573 -0.01011427

113 124.99069487 -0.00930513

114 124.99143928 -0.00856072

115 124.99212414 -0.00787586

116 124.99275421 -0.00724579

117 124.99333387 -0.00666613

118 124.99386716 -0.00613284

119 124.99435779 -0.00564221

120 124.99480917 -0.00519083

121 124.99522443 -0.00477557

122 124.99560648 -0.00439352

123 124.99595796 -0.00404204

124 124.99628132 -0.00371868

125 124.99657882 -0.00342118

126 124.99685251 -0.00314749

127 124.99710431 -0.00289569

128 124.99733597 -0.00266403

129 124.99754909 -0.00245091

130 124.99774516 -0.00225484

131 124.99792555 -0.00207445

132 124.99809150 -0.00190850

133 124.99824418 -0.00175582

134 124.99838465 -0.00161535

135 124.99851388 -0.00148612

136 124.99863277 -0.00136723

137 124.99874215 -0.00125785

138 124.99884277 -0.00115723

139 124.99893535 -0.00106465

Numeric solution is 124.9989353524933

Answer by the geometric series formula, assuming \(\beta<1\)

print(f'Analytic solution is {a.analytic}')

Analytic solution is 125.00000000000006

When is Bellman operator a contraction?

Bellman operator \(T: U \rightarrow U\) from functional space \(U\) to itself

metric space \((U,d_{\infty})\) with uniform/infinity/sup norm (max abs distance between functions over their domain)

Blackwell sufficient conditions for contraction

Let \(X \subseteq \mathbb{R}^n\) and \(B(x)\) be the space of bounded functions \(f: X \rightarrow \mathbb{R}\) defined on \(X\). Suppose that \(T: B(X) \rightarrow B(X)\) is an operator satisfying the following conditions:

(monotonicity) For any \(f,g \in B(X)\) and \(f(x) \le g(x)\) for all \(x\in X\) implies \(T(f)(x) \le T(g)(x)\) for all \(x\in X\),

(discounting) There exists \(\beta \in (0,1)\) such that

Then \(T\) is a contraction mapping with modulus \(\beta\).

Monotonicity of Bellman equation follows trivially due to maximization in \(T(V)(x)\)

Discounting: satisfied by elementary argument when \(\beta<1\)

Bellman operator is contraction mapping by Blackwell condition as long as the value function is bounded and the discount factor is less than one

In practical application with the upper bound on the state space, the value function is generally bounded

In many applications the norm \(\rho\) in the metric space can be adjusted to make the value function bounded

\(\Rightarrow\)

unique solution

VFI algorithm is globally convergent

does not depend on the numerical implementation of the Bellman operator

Other classes of dynamic optimization problems#

Classification of dynamic problems by various criteria:

Nature of time

discrete time: here

continuous time: studied in the calculus of variation, optimal control theory and other branches of math

Uncertainty in the beliefs of the decision makers

deterministic problems: all transition rules are given by laws of motion, i.e. mappings from states and choices to next period states

stochastic problems: some transitions rules are given by conditional densities or transition probabilities from current to next period states

Nature of choice space

discrete: well suited for numerical full enumeration methods (grid search)

continuous: optimization theory of this course applied in full

mixed discrete-continuous: math is more complicated and interesting

Nature of state space

discrete: well suited for numerical methods

continuous: most often convert to discrete by discretization (grids)

mixed discrete-continuous

Multiple decision making processes

single agent models: here

equilibrium models: each decision makers takes environment variables as given, yet the equilibrium environment is formed by the aggregate behavior

multiple agents models: strategic interaction between decision makers, very hard because the joint Bellman operator is no more a contraction

Corresponding to many types of dynamic optimization problems there are many-many variations of the solution approaches

For finite horizon dynamic problems:

Standard backwards induction: solving Bellman equation sequentially

Backwards induction using F.O.C. of Bellman maximization problem (for problems with continuous choice)

Finite horizon version of endogenous gridpoint method (for a particular class of problems)

For infinite horizon dynamic problems:

Value function iterations (successive approximations to solve for the fixed point of Bellman operator)

Time iterations (successive approximations to solve for the fixed point of Coleman-Reffett operator in policy function space)

Policy iteration method (Howard’s policy improvement algorithm, iterative solution for the fixed point of Bellman operator)

Newton-Kantorovich method (Newton solver for the fixed point of Bellman operator)

Endogenous gridpoint method (for a particular class of problems)

References and reading#

References

[Sundaram, 1996]: Chapter 11

Further reading and self-learning

Computer science view on DP https://www.techiedelight.com/introduction-dynamic-programming

Popular optimal stopping https://www.americanscientist.org/article/knowing-when-to-stop

For more details the inventory problem see my screencast on writing this code

For more dynamic programming and numerical methods see my Foundations of Computational Economics online course