🔬 Tutorial problems lambda


import numpy as np

import matplotlib.pyplot as plt

from mpl_toolkits.mplot3d import Axes3D

from matplotlib import cm

from myst_nb import glue

f = lambda x: (x[0])**3 - (x[1])**3

lb,ub = -1.5,1.5

x = y = np.linspace(lb,ub, 100)

X, Y = np.meshgrid(x, y)

zs = np.array([f((x,y)) for x,y in zip(np.ravel(X), np.ravel(Y))])

Z = zs.reshape(X.shape)

a,b=1,1

# (x/a)^2 + (y/b)^2 = 1

theta = np.linspace(0, 2 * np.pi, 100)

X1 = a*np.cos(theta)

Y1 = b*np.sin(theta)

zs = np.array([f((x,y)) for x,y in zip(np.ravel(X1), np.ravel(Y1))])

Z1 = zs.reshape(X1.shape)

fig = plt.figure(dpi=160)

ax2 = fig.add_subplot(111)

ax2.set_aspect('equal', 'box')

ax2.contour(X, Y, Z, 50, cmap=cm.jet)

ax2.plot(X1, Y1)

plt.setp(ax2, xticks=[],yticks=[])

glue("pic1", fig, display=False)

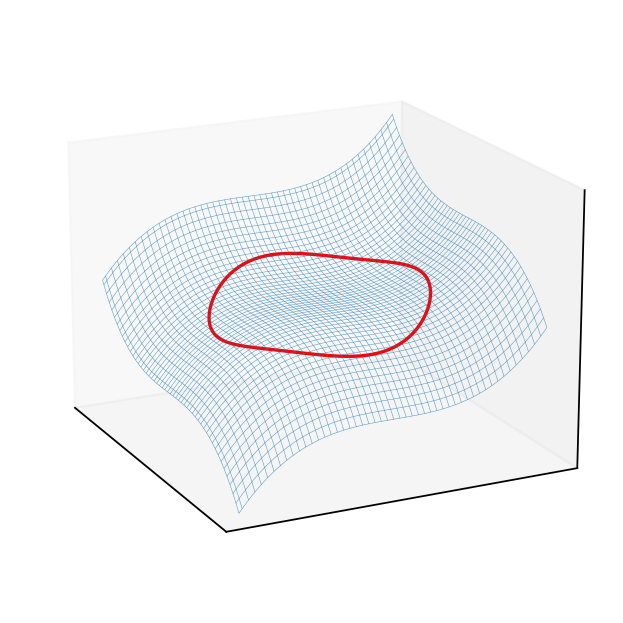

fig = plt.figure(dpi=160)

ax3 = fig.add_subplot(111, projection='3d')

ax3.plot_wireframe(X, Y, Z, rstride=2, cstride=2, alpha=0.7, linewidth=0.25)

f0 = f(np.zeros((2)))+0.1

ax3.plot(X1, Y1, Z1, c='red')

plt.setp(ax3,xticks=[],yticks=[],zticks=[])

ax3.view_init(elev=18, azim=154)

glue("pic2", fig, display=False)

f = lambda x: x[0]**3/3 - 3*x[1]**2 + 5*x[0] - 6*x[0]*x[1]

x = y = np.linspace(-10.0, 10.0, 100)

X, Y = np.meshgrid(x, y)

zs = np.array([f((x,y)) for x,y in zip(np.ravel(X), np.ravel(Y))])

Z = zs.reshape(X.shape)

a,b=4,8

# (x/a)^2 + (y/b)^2 = 1

theta = np.linspace(0, 2 * np.pi, 100)

X1 = a*np.cos(theta)

Y1 = b*np.sin(theta)

zs = np.array([f((x,y)) for x,y in zip(np.ravel(X1), np.ravel(Y1))])

Z1 = zs.reshape(X1.shape)

fig = plt.figure(dpi=160)

ax2 = fig.add_subplot(111)

ax2.set_aspect('equal', 'box')

ax2.contour(X, Y, Z, 50, cmap=cm.jet)

ax2.plot(X1, Y1)

plt.setp(ax2, xticks=[],yticks=[])

glue("pic3", fig, display=False)

fig = plt.figure(dpi=160)

ax3 = fig.add_subplot(111, projection='3d')

ax3.plot_wireframe(X, Y, Z, rstride=2, cstride=2, alpha=0.7, linewidth=0.25)

f0 = f(np.zeros((2)))+0.1

ax3.plot(X1, Y1, Z1, c='red')

plt.setp(ax3,xticks=[],yticks=[],zticks=[])

ax3.view_init(elev=18, azim=154)

glue("pic4", fig, display=False)

\(\lambda\).1

Solve the following constrained maximization problem using the Karush-Kuhn-Tucker method. Verify that the stationary (critical) points found satisfy the second-order conditions.

\[
\max_{x,y} \; x^3 - y^3 \quad \text{subject to} \quad x^2 + y^2 \le 1
\]

The standard KKT method should work for this problem.

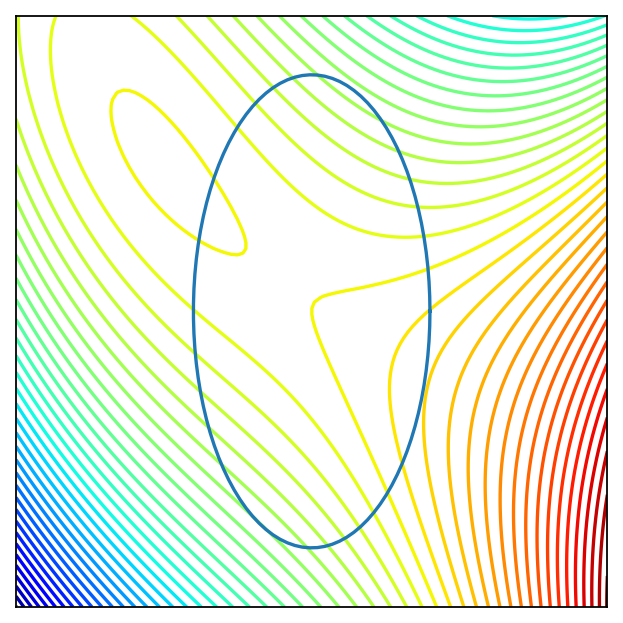

Fig. 100 Level curves of the criterion function and constraint curve.

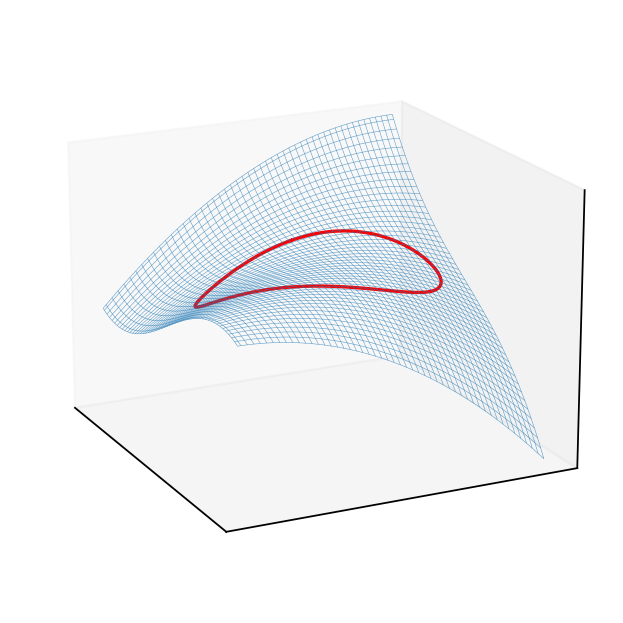

Fig. 101 3D plot of the criterion surface with the constraint curve projected onto it.

The constraint is \(g(x,y) := x^2+y^2 - 1 \le 0\). The Lagrangian function is

\[
\mathcal{L}(x,y,\lambda) = x^3 - y^3 - \lambda\,(x^2 + y^2 - 1)
\]

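The necessary KKT conditions are given by the following system of equations and inequalities

\[
\begin{cases}
3x^2 - 2\lambda x = 0, \\
-3y^2 - 2\lambda y = 0, \\
x^2 + y^2 - 1 \le 0, \\
\lambda \ge 0, \\
\lambda\,(x^2 + y^2 - 1) = 0.
\end{cases}
\]

To solve this system, we consider the two possible cases: \(\lambda=0\) and \(\lambda>0\).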

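Case 1. \(\lambda=0\): the constraint need not be binding. Then the FOCs reduce to \(3x^2=0\) and \(-3y^2=0\), which imply \(x=y=0\), a feasible point. The unconstrained Hessian matrix is

\[
H(x,y) = \begin{pmatrix} 6x & 0 \\ 0 & -6y \end{pmatrix},
\qquad
H(0,0) = \begin{pmatrix} 0 & 0 \\ 0 & 0 \end{pmatrix}.
\]

We have \(\det(H)=0\) and \(\mathrm{trace}(H)=0\), so the second-order test is inconclusive at this stationary point. (In fact, \(f(t,0)=t^3\) changes sign at \(t=0\), so the origin is neither a local maximum nor a local minimum.)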

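Case 2. \(\lambda>0\): the constraint is binding. The problem is then an optimization problem with an equality constraint, with \(N=2\) control variables and \(K=1\) constraint. From the FOCs, we have

\[
x\,(3x - 2\lambda) = 0, \qquad y\,(3y + 2\lambda) = 0.
\]

Since \(\lambda>0\), either \(x=0\) or \(x=2\lambda/3>0\), and either \(y=0\) or \(y=-2\lambda/3<0\). The constraint \(x^2+y^2=1\) rules out \(x=y=0\), so there are three cases: “\(x=0, y<0\)”, “\(x>0, y=0\)” or “\(x>0, y<0\)”.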

(i) If \(x>0, y<0\), then it follows from the above conditions that

\[
\lambda = \frac{3x}{2} = -\frac{3y}{2}, \qquad \text{so} \qquad x = -y.
\]

Since \(x^2+y^2=1\) and \(x>0, y<0\), we have \(x=1/\sqrt{2}, y=-1/\sqrt{2}\) and then \(\lambda= 3/(2\sqrt{2})\).
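As a quick numerical sanity check, the sketch below evaluates the first-order conditions and the constraint at the candidate from case (i), together with the candidates obtained in cases (ii) and (iii). It assumes the objective \(f(x,y)=x^3-y^3\) (as in the plotting code) and the Lagrangian \(\mathcal{L} = f - \lambda(x^2+y^2-1)\); the helper name `kkt_residuals` is introduced here for illustration only.

```python
import math

def kkt_residuals(x, y, lam):
    """Return (dL/dx, dL/dy, g) for L = x^3 - y^3 - lam*(x^2 + y^2 - 1).

    Assumed objective: f(x, y) = x^3 - y^3; constraint g = x^2 + y^2 - 1 <= 0.
    """
    dLdx = 3 * x**2 - 2 * lam * x
    dLdy = -3 * y**2 - 2 * lam * y
    g = x**2 + y**2 - 1
    return dLdx, dLdy, g

s = 1 / math.sqrt(2)
candidates = {
    "(i)": (s, -s, 3 / (2 * math.sqrt(2))),
    "(ii)": (0.0, -1.0, 1.5),
    "(iii)": (1.0, 0.0, 1.5),
}

for name, (x, y, lam) in candidates.items():
    # All three residuals should vanish (up to rounding) at a boundary KKT point.
    print(name, kkt_residuals(x, y, lam))
```

All residuals should be zero up to floating-point rounding, and each multiplier is strictly positive, consistent with a binding constraint.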

Observe that \(\mathrm{rank}(Dg) = \mathrm{rank}((2x, 2y)) = 1\) whenever \(x \ne 0\) or \(y \ne 0\), so the constraint qualification holds at every point on the boundary.

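The bordered Hessian matrix is

\[
H\mathcal{L}(x,y,\lambda) =
\begin{pmatrix}
0 & 2x & 2y \\
2x & 6x - 2\lambda & 0 \\
2y & 0 & -6y - 2\lambda
\end{pmatrix}
\]

Now, the bordered Hessian at \((x,y,\lambda) = (1/\sqrt{2}, -1/\sqrt{2}, 3/(2\sqrt{2}))\) is

\[
H\mathcal{L} =
\begin{pmatrix}
0 & \sqrt{2} & -\sqrt{2} \\
\sqrt{2} & 3/\sqrt{2} & 0 \\
-\sqrt{2} & 0 & 3/\sqrt{2}
\end{pmatrix}
\]

Since \(N-K=1\), it suffices to check the last leading principal minor. The determinant is

\[
\det(H\mathcal{L}) = -\sqrt{2}\cdot\sqrt{2}\cdot\frac{3}{\sqrt{2}} - \sqrt{2}\cdot\sqrt{2}\cdot\frac{3}{\sqrt{2}} = -6\sqrt{2} < 0,
\]

which has the same sign as \((-1)^K=(-1)\). Therefore, the Hessian of the Lagrangian is positive definite on the constraint set and we have a local minimum on the boundary.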

(ii) If \(x=0, y<0\), then from \(x^2+y^2=1\), we have \(y=-1\). Also, \(\lambda=-3y/2 = 3/2\). The bordered Hessian is

\[
H\mathcal{L} =
\begin{pmatrix}
0 & 0 & -2 \\
0 & -3 & 0 \\
-2 & 0 & 3
\end{pmatrix}
\]

The determinant is \(\det (H\mathcal{L})=12 >0\), which has the same sign as \((-1)^N=(-1)^2\), so the Hessian of the Lagrangian is negative definite on the constraint set. Therefore, \((0, -1)\) is a local maximum.

(iii) If \(x>0, y=0\), then from \(x^2+y^2=1\), we have \(x=1\). Also, \(\lambda=3x/2 = 3/2\). The bordered Hessian is

\[
H\mathcal{L} =
\begin{pmatrix}
0 & 2 & 0 \\
2 & 3 & 0 \\
0 & 0 & -3
\end{pmatrix}
\]

The determinant is \(\det (H\mathcal{L})=12 >0\), which has the same sign as \((-1)^N=(-1)^2\), so the Hessian of the Lagrangian is negative definite on the constraint set. Therefore, \((1, 0)\) is a local maximum.
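The three determinants can be reproduced with a few lines of plain Python. This is a minimal sketch under the assumption that the bordered Hessian is built from \(Dg=(2x,2y)\) and the second derivatives of \(\mathcal{L} = x^3 - y^3 - \lambda(x^2+y^2-1)\); `det3` and `bordered_hessian` are helper names made up for this illustration.

```python
import math

def det3(m):
    """Determinant of a 3x3 matrix (nested lists) by cofactor expansion."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)

def bordered_hessian(x, y, lam):
    """Bordered Hessian of L = x^3 - y^3 - lam*(x^2 + y^2 - 1) (assumed form)."""
    return [
        [0.0, 2 * x, 2 * y],
        [2 * x, 6 * x - 2 * lam, 0.0],
        [2 * y, 0.0, -6 * y - 2 * lam],
    ]

s = 1 / math.sqrt(2)
det_i = det3(bordered_hessian(s, -s, 3 / (2 * math.sqrt(2))))
det_ii = det3(bordered_hessian(0.0, -1.0, 1.5))
det_iii = det3(bordered_hessian(1.0, 0.0, 1.5))

# Sign of (-1)^K = -1 suggests a constrained local minimum;
# sign of (-1)^N = +1 suggests a constrained local maximum.
print(det_i, det_ii, det_iii)
```

The first determinant is negative (local minimum on the boundary) and the other two are positive (local maxima), in line with cases (i) to (iii).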

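Finally, the objective values at the two local maxima are \(f(1,0)=f(0,-1)=1\). Since \(f\) is continuous and the constraint set is compact, a global maximum exists by the Weierstrass theorem, and because the constraint qualification holds it must be attained at a KKT point. The remaining candidates give smaller values, \(f(0,0)=0\) and \(f(1/\sqrt{2},-1/\sqrt{2})=1/\sqrt{2}\). Therefore, these two local maximizers are also global maximizers.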
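A brute-force search over a grid on the unit disc offers an independent check of the global maximum. The sketch assumes the objective \(f(x,y)=x^3-y^3\) from the plotting code; the grid resolution `n` is an arbitrary choice, picked so that the points \((1,0)\) and \((0,-1)\) lie exactly on the grid.

```python
import math

def f(x, y):
    # Assumed objective of problem lambda.1
    return x**3 - y**3

n = 200  # grid step is 2/n = 0.01
best, best_pt = -math.inf, None
for i in range(n + 1):
    for j in range(n + 1):
        x = -1 + 2 * i / n
        y = -1 + 2 * j / n
        # Keep only feasible points (small tolerance for rounding on the boundary)
        if x * x + y * y <= 1 + 1e-12:
            val = f(x, y)
            if val > best:
                best, best_pt = val, (x, y)

print(best, best_pt)
```

The search reports only the first maximizer it encounters; the other maximizer attains the same value.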

\(\lambda\).2

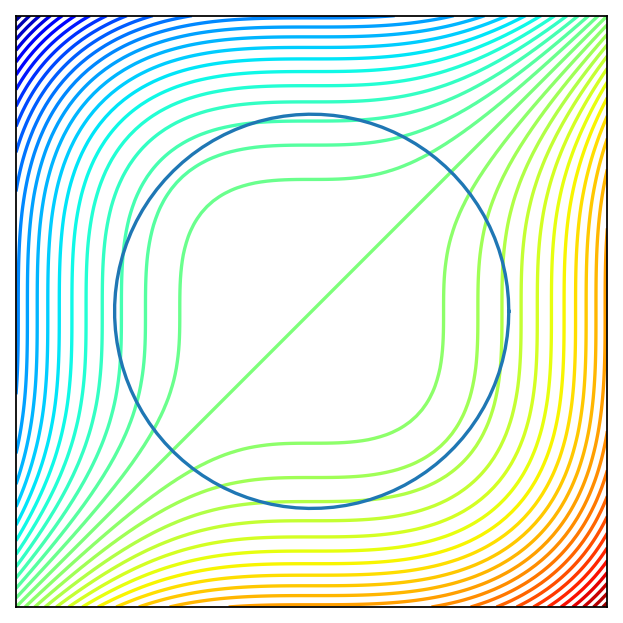

Solve the following constrained maximization problem using the Karush-Kuhn-Tucker method. Verify that the stationary (critical) points found satisfy the second-order conditions.

The standard KKT method should work for this problem.

The Lagrangian function is

KKT conditions are

Case 1: \(\lambda = 0\)

This is the unconstrained case with the number of variables \(N=2\). KKT conditions simplify to

Thus, \(x=0\), but then \(y^2+2>0\) for any \(y\), and therefore the inequality is violated. We conclude that \(\lambda=0\) is not a solution.

Case 2: \(\lambda > 0\)

This is a constrained case with \(N=2\) variables and \(K=1\) binding constraint. KKT conditions simplify to

Using the condition \(x+y=0\), the last equation simplifies to \((x - y)(x+y) -2xy - 2 = -2(xy+1) = 0\), and thus \(xy+1=0\). Substituting \(y=-x\), we have

\[
1 - x^2 = 0 \quad \Longleftrightarrow \quad x = \pm 1.
\]

Then, the two stationary points are \((x,y) = (1,-1)\) and \((x,y) = (-1,1)\), with \(\lambda = x/(x-y) = 1/2\) for both points.
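The two stationary points can be checked numerically. The problem statement itself did not survive in this text, so the sketch below works under an assumption inferred from the solution steps: objective \(f(x,y)=-x^2\), constraint \(x^2-2xy-y^2-2\ge 0\), and Lagrangian \(\mathcal{L}=-x^2+\lambda(x^2-2xy-y^2-2)\). This form reproduces \(\lambda = x/(x-y)\), the condition \(x+y=0\), and the values \(f(\pm1,\mp1)=-1\) quoted in the text, but it remains a reconstruction.

```python
def lagrangian_gradient(x, y, lam):
    """FOC residuals and constraint value for the ASSUMED problem:
    L = -x^2 + lam * (x^2 - 2xy - y^2 - 2), constraint h >= 0.
    """
    dLdx = -2 * x + lam * (2 * x - 2 * y)
    dLdy = lam * (-2 * x - 2 * y)
    h = x**2 - 2 * x * y - y**2 - 2
    return dLdx, dLdy, h

points = [(1.0, -1.0), (-1.0, 1.0)]
for x, y in points:
    lam = x / (x - y)  # multiplier formula from the text
    print((x, y), lam, lagrangian_gradient(x, y, lam))

# Case lambda = 0 forces x = 0, where the assumed constraint is violated for any y:
print([lagrangian_gradient(0.0, y, 0.0)[2] for y in (-2.0, 0.0, 2.0)])
```

Both points give \(\lambda=1/2\) with zero residuals and a binding constraint, while at \(x=0\) the assumed constraint value is \(-(y^2+2)<0\).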

Let’s check the second-order conditions for each of these points:

\((x,y) = (1,-1)\), \(\lambda=1/2\)

The bordered Hessian is

We have to check \(N-K=1\) leading principal minor of the bordered Hessian, namely the full determinant (no rows or columns are removed):

The sign of the full determinant is the same as that of \((-1)^N\). Because only one determinant has to be checked, the sign-alternation requirement is satisfied trivially. We conclude that the relevant quadratic form is negative definite, and thus the point \((1,-1)\) is a local maximizer.

\((x,y) = (-1,1)\), \(\lambda=1/2\)

The bordered Hessian is

Similar to the previous point, we have

Again, we conclude that at point \((-1,1)\) the objective function attains a local maximum.
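The second-order check at both points can be sketched numerically. As before, the problem form is an assumption reconstructed from the solution steps (objective \(-x^2\), binding constraint \(h(x,y)=x^2-2xy-y^2-2=0\), Lagrangian \(\mathcal{L}=-x^2+\lambda h\)); the matrices in the original text may differ in the sign convention of the border, which does not affect the determinant.

```python
def det3(m):
    """Determinant of a 3x3 matrix (nested lists) by cofactor expansion."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)

def bordered_hessian_2(x, y, lam):
    """Bordered Hessian for the ASSUMED L = -x^2 + lam*(x^2 - 2xy - y^2 - 2)."""
    hx, hy = 2 * x - 2 * y, -2 * x - 2 * y   # gradient of the constraint
    lxx, lxy, lyy = -2 + 2 * lam, -2 * lam, -2 * lam
    return [
        [0.0, hx, hy],
        [hx, lxx, lxy],
        [hy, lxy, lyy],
    ]

det_a = det3(bordered_hessian_2(1.0, -1.0, 0.5))
det_b = det3(bordered_hessian_2(-1.0, 1.0, 0.5))
print(det_a, det_b)  # both positive, i.e. the sign of (-1)^N with N = 2
```

A positive determinant with \(N=2\) and \(K=1\) matches the sign pattern for a constrained local maximum at both points.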

Finally, to find the global maximum, we can compare the values of the objective function at the stationary points:

\(f(1,-1) = - 1^2 = -1\)

\(f(-1,1) = - (-1)^2 = -1\)

Thus, the problem has two global maximizers which coincide with the two found local constrained maximizers \((1,-1)\) and \((-1,1)\).

\(\lambda\).3

Roy’s identity

Consider the choice problem of a consumer endowed with a strictly concave and differentiable utility function \(u \colon \mathbb{R}^N_{++} \to \mathbb{R}\), where \(\mathbb{R}^N_{++}\) denotes the set of vectors in \(\mathbb{R}^N\) with strictly positive elements.

The budget constraint is given by \({\bf p}\cdot{\bf x} \le m\) where \({\bf p} \in \mathbb{R}^N_{++}\) are prices and \(m>0\) is income.

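Then the demand function \(x^\star({\bf p},m)\) and the indirect utility function \(v({\bf p},m)\) (the value function of the problem) satisfy the equations

\[
x_i^\star({\bf p},m) = -\frac{\partial v({\bf p},m) / \partial p_i}{\partial v({\bf p},m) / \partial m}, \qquad i=1,\dots,N.
\]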

Prove the statement

Verify the statement by direct calculation (i.e. by expressing the indirect utility and plugging its partials into the identity) using the following specification of utility

\[
u({\bf x}) = \prod_{i=1}^{N} x_i^{\alpha_i}, \qquad \alpha_i > 0 \text{ for all } i.
\]

The envelope theorem should be useful here.

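We first prove Roy’s identity.

The value function is \(v(p, m)=\max\{u(x): p \cdot x \leq m\}\) where \(u\colon \mathbb{R}^{N}_{++}\to \mathbb{R}\). The Lagrangian of the maximization problem is \(\mathcal{L}(x, \lambda, p, m) = u(x) - \lambda (\sum_{i=1}^{N}p_{i}x_{i}-m)\). The Envelope Theorem implies

\[
\frac{\partial v}{\partial p_i} = \frac{\partial \mathcal{L}}{\partial p_i}\bigg|_{x = x^\star} = -\lambda x_i^\star,
\qquad
\frac{\partial v}{\partial m} = \frac{\partial \mathcal{L}}{\partial m}\bigg|_{x = x^\star} = \lambda.
\]

Note, by the way, that here \(\lambda\) is indeed the shadow price of the budget constraint: the slope of the indirect utility along the \(m\) dimension is \(\lambda\).

It follows from the previous equations that

\[
-\frac{\partial v / \partial p_i}{\partial v / \partial m} = \frac{\lambda x_i^\star}{\lambda} = x_i^\star({\bf p},m).
\]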

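Next, let \(u(x)= \prod_{i=1}^{N}x_{i}^{\alpha_i}\) where \(\alpha_i>0\) for all \(i\). Since the \(\log(\cdot)\) function is strictly increasing, the optimization problem is equivalent to maximizing \(u(x)=\sum_{i=1}^{N}\alpha_{i}\log(x_i)\). The corresponding Lagrangian is

\[
\mathcal{L}(x, \lambda, p, m) = \sum_{i=1}^{N}\alpha_{i}\log(x_i) - \lambda \Big(\sum_{i=1}^{N}p_{i}x_{i}-m\Big)
\]

The first-order conditions yield

\[
\frac{\alpha_i}{x_i} = \lambda p_i \;\; \text{for all } i,
\qquad
\sum_{i=1}^{N} p_i x_i = m
\quad \Longrightarrow \quad
\lambda = \frac{\sum_{j=1}^{N}\alpha_j}{m},
\qquad
x_i^\star = \frac{\alpha_i}{\sum_{j=1}^{N}\alpha_j}\,\frac{m}{p_i}.
\]

Hence, the optimal value function is

\[
v(p,m) = \sum_{i=1}^{N} \alpha_i \log\Big(\frac{\alpha_i}{\sum_{j=1}^{N}\alpha_j}\,\frac{m}{p_i}\Big)
\]

To verify Roy’s identity, observe that

\[
\frac{\partial v}{\partial p_i} = -\frac{\alpha_i}{p_i},
\qquad
\frac{\partial v}{\partial m} = \frac{\sum_{j=1}^{N}\alpha_j}{m}.
\]

Then, we have

\[
-\frac{\partial v / \partial p_i}{\partial v / \partial m} = \frac{\alpha_i}{\sum_{j=1}^{N}\alpha_j}\,\frac{m}{p_i} = x_i^\star(p,m).
\]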
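Roy’s identity for this specification can also be checked numerically. The sketch below assumes the log form of the utility, \(\sum_i \alpha_i \log x_i\), for which the demand is \(x_i^\star = \alpha_i m / (p_i \sum_j \alpha_j)\), and approximates the partial derivatives of \(v\) by central finite differences. The function names, the step size `h`, and the test values of prices, income and \(\alpha\) are arbitrary choices made for illustration.

```python
import math

def demand(p, m, alpha):
    """Closed-form demand for log Cobb-Douglas utility (assumed specification)."""
    total = sum(alpha)
    return [a * m / (total * pi) for a, pi in zip(alpha, p)]

def indirect_utility(p, m, alpha):
    """Value function: utility evaluated at the optimal bundle."""
    return sum(a * math.log(x) for a, x in zip(alpha, demand(p, m, alpha)))

def roy_demand(p, m, alpha, i, h=1e-6):
    """Right-hand side of Roy's identity, -v_{p_i} / v_m, via central differences."""
    p_up, p_dn = list(p), list(p)
    p_up[i] += h
    p_dn[i] -= h
    dv_dp = (indirect_utility(p_up, m, alpha) - indirect_utility(p_dn, m, alpha)) / (2 * h)
    dv_dm = (indirect_utility(p, m + h, alpha) - indirect_utility(p, m - h, alpha)) / (2 * h)
    return -dv_dp / dv_dm

p, m, alpha = [2.0, 3.0, 5.0], 10.0, [0.2, 0.3, 0.5]
x_star = demand(p, m, alpha)
x_roy = [roy_demand(p, m, alpha, i) for i in range(len(p))]
print(x_star)
print(x_roy)
```

The two printed vectors should agree up to the finite-difference error.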